Microsoft Azure Kubernetes Service, Part 3: Manually scaling AKS clusters

In the previous parts of this workshop, we used the Azure Kubernetes Service, or AKS for short, to deploy an application on a container cluster. In this part, we look at scaling Kubernetes clusters in general and AKS clusters in particular.


Nodes and pods in a container cluster can be scaled quite easily by hand with the Azure Kubernetes Service.

(Image: Drilling / Microsoft)

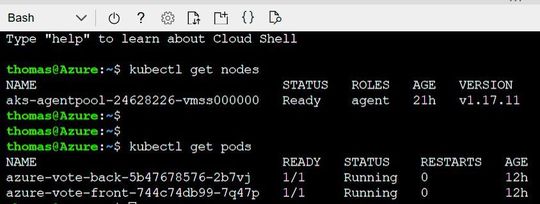

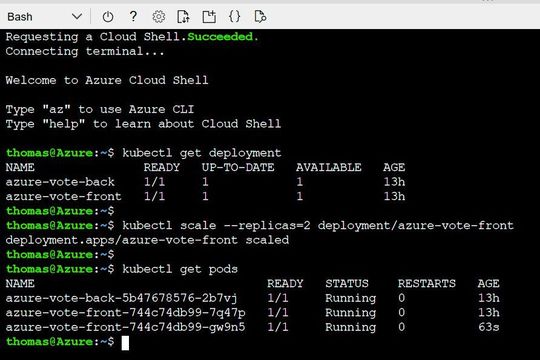

The node pool is ready; our application currently consists of two pods, one for the frontend and one for the backend.

(Image: Drilling / Microsoft)

As in the second part of this series, let’s start by displaying the number and distribution of node pools and nodes in the cluster, which also verifies the connectivity to the AKS cluster created there. For this purpose, we use the kubectl command “get nodes”:

kubectl get nodes

Next, the command

kubectl get pods

shows how many pods our application consists of, together with the name and status of each pod.

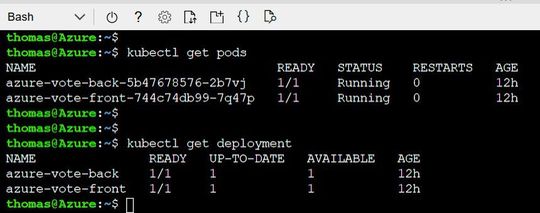

Our deployment consists of a frontend and a backend.

(Image: Drilling / Microsoft)

We can also check the status of our deployment at any time with the command:

kubectl get deployment
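If you only need the replica numbers of a single deployment, a helper along the following lines can read them through kubectl's JSONPath output. This is a minimal sketch for a POSIX shell, assuming kubectl already points at the AKS cluster; the function name `replica_status` is hypothetical, while `.spec.replicas` and `.status.readyReplicas` are standard Deployment fields.

```shell
# Hypothetical helper: print "<ready>/<desired>" replicas for one deployment.
# The API server omits .status.readyReplicas while no pod is ready,
# so an empty value is printed as 0.
replica_status() {
  deployment="$1"
  ready=$(kubectl get deployment "$deployment" -o jsonpath='{.status.readyReplicas}')
  desired=$(kubectl get deployment "$deployment" -o jsonpath='{.spec.replicas}')
  echo "${ready:-0}/${desired}"
}
```

Called as `replica_status <Deployment Name>`, it prints the ready and the desired replica count separated by a slash.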

Now we want to look at the scaling options in AKS and try out some types of manual scaling. First, though, some background knowledge.

Scaling in Kubernetes and AKS

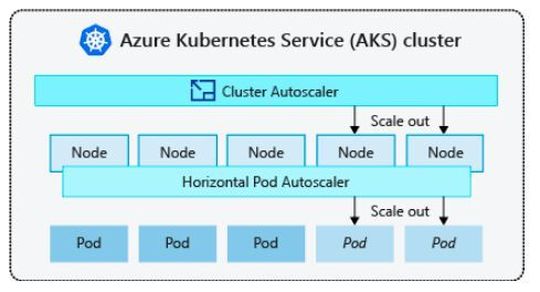

Since the resource demands of applications can outgrow the capacity of the existing nodes, Kubernetes offers automatic cluster scaling, which adds or removes compute resources in the form of nodes.

By default, the cluster autoscaler checks every ten seconds whether the number of nodes needs to be adjusted, for example because pods cannot be scheduled for lack of resources or because nodes are underutilized. If scaling is required, the number of nodes in the AKS cluster is automatically increased or decreased. In addition, Kubernetes has a horizontal pod autoscaler (HPA) that scales the number of pods based on metrics and works hand in hand with the cluster autoscaler.
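On AKS, the cluster autoscaler is switched on with the Azure CLI. The following sketch wraps `az aks update` in a hypothetical function `enable_cluster_autoscaler`. It assumes a cluster with a single node pool (clusters with several pools configure the autoscaler per pool with `az aks nodepool update`) and an Azure CLI session that is already logged in; the minimum and maximum node counts are values you choose.

```shell
# Hypothetical wrapper: enable the AKS cluster autoscaler on an existing
# single-node-pool cluster and bound the node count it may choose.
enable_cluster_autoscaler() {
  resource_group="$1"
  cluster="$2"
  min_count="$3"
  max_count="$4"
  az aks update \
    --resource-group "$resource_group" \
    --name "$cluster" \
    --enable-cluster-autoscaler \
    --min-count "$min_count" \
    --max-count "$max_count"
}
```

For example, `enable_cluster_autoscaler <Resource Group Name> <Cluster Name> 1 3` would let AKS vary the node count between one and three.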

The horizontal pod autoscaler monitors the resource requirements of the application and automatically adjusts the number of replicas. By default, it checks the Metrics API every 30 seconds for required changes in the replica count.
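A horizontal pod autoscaler can be attached to an existing deployment with `kubectl autoscale`. The sketch below is hedged in two ways: the wrapper name `create_cpu_hpa` is hypothetical, and CPU percentage targets only work if the deployment's containers declare CPU requests, which this article does not show.

```shell
# Hypothetical helper: attach a CPU-based horizontal pod autoscaler to a
# deployment. kubectl names the HPA after the deployment by default.
create_cpu_hpa() {
  deployment="$1"
  cpu_percent="$2"
  min_replicas="$3"
  max_replicas="$4"
  kubectl autoscale deployment "$deployment" \
    --cpu-percent="$cpu_percent" \
    --min="$min_replicas" \
    --max="$max_replicas"
  # Show current vs. target utilization and the replica count the HPA chose.
  kubectl get hpa "$deployment"
}
```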

The scaling options in AKS.

(Image: Microsoft)

In general, the horizontal pod autoscaler increases or reduces the number of pods as the application requires. The cluster autoscaler, in turn, changes the number of nodes so that there is enough capacity for additional pods. When the number of required application instances changes, the number of underlying Kubernetes nodes may therefore have to change as well.
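The Kubernetes documentation describes the core calculation of the horizontal pod autoscaler as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The following shell function, `hpa_desired_replicas` (a hypothetical name), reproduces only that formula with integer arithmetic; the real controller additionally applies a tolerance band (10% by default), the configured minimum and maximum replicas, and stabilization windows.

```shell
# Core rule of the horizontal pod autoscaler, as documented by Kubernetes:
#   desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)
# Integer ceiling division is done as (a + b - 1) / b. Tolerance, min/max
# limits and stabilization windows are deliberately left out of this sketch.
hpa_desired_replicas() {
  current_replicas="$1"   # pods currently running
  current_metric="$2"     # e.g. average CPU utilization in percent
  target_metric="$3"      # e.g. the HPA's target utilization in percent
  numerator=$((current_replicas * current_metric))
  echo $(( (numerator + target_metric - 1) / target_metric ))
}
```

With two pods at 90% CPU and a target of 50%, `hpa_desired_replicas 2 90 50` yields 4 (2 × 90 / 50 = 3.6, rounded up); with four pods at 20%, `hpa_desired_replicas 4 20 50` yields 2. The same rule thus scales in both directions, and a higher pod count may in turn require additional nodes.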

However, AKS admins can also scale the number of replicas (pods) and nodes manually at any time, for example to test how the application responds to changes in available resources and state. Manual scaling lets you define a fixed amount of resources, such as the number of nodes, and enforce it: you simply specify the desired number of replicas and/or nodes.

Kubernetes then schedules the creation of additional pods or the draining of nodes based on this replica or node count. When nodes are scaled down, the Azure Compute API that belongs to the compute type used by the cluster is called. For example, if the AKS cluster is based on Virtual Machine Scale Sets, the VM Scale Sets API determines which nodes are removed.
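To see which scale sets AKS is actually resizing, you can look them up in the cluster's node resource group. This sketch assumes node pools of the default type VirtualMachineScaleSets and a logged-in Azure CLI; the function name `show_node_scale_sets` is hypothetical.

```shell
# Hypothetical helper: list the Virtual Machine Scale Sets behind an AKS
# cluster's node pools. AKS keeps them in a separate "node resource group",
# whose name is read from the cluster object first.
show_node_scale_sets() {
  resource_group="$1"
  cluster="$2"
  node_rg=$(az aks show --resource-group "$resource_group" --name "$cluster" \
    --query nodeResourceGroup -o tsv)
  az vmss list --resource-group "$node_rg" -o table
}
```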

Cooling down scaling events

Because the horizontal pod autoscaler checks the Metrics API every 30 seconds, a previous scaling event may not have completed before the next check is made. The autoscaler could therefore change the number of replicas again before the previous scaling event has received application workload and the resource demands have adjusted accordingly.

To minimize such events, the admin can set a delay value. It defines how long the horizontal pod autoscaler must wait after a scaling event before another scaling event can be triggered. This gives the new replica count time to take effect and the Metrics API time to reflect the distributed workload.
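In current Kubernetes versions, this kind of delay is configured per autoscaler as a stabilization window in the `behavior` section of the `autoscaling/v2` API; this may not be exactly the mechanism the text above had in mind. The following sketch applies such an HPA from a shell here-document. The function name `apply_hpa_with_stabilization`, the CPU target of 50% and the replica range of 1 to 10 are illustrative assumptions; if `scaleDown.stabilizationWindowSeconds` is not set, Kubernetes uses 300 seconds for scaling down.

```shell
# Hypothetical helper: create or update an HPA (autoscaling/v2) for a deployment
# whose scale-down decisions are damped by a stabilization window.
# Assumed values for illustration: CPU target 50 %, 1 to 10 replicas.
apply_hpa_with_stabilization() {
  deployment="$1"
  window_seconds="$2"
  # The manifest is passed to kubectl on stdin ("-f -").
  kubectl apply -f - <<EOF
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: ${deployment}
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: ${deployment}
  minReplicas: 1
  maxReplicas: 10
  metrics:
  - type: Resource
    resource:
      name: cpu
      target:
        type: Utilization
        averageUtilization: 50
  behavior:
    scaleDown:
      stabilizationWindowSeconds: ${window_seconds}
EOF
}
```

During the window, the autoscaler keeps the replica recommendations it has computed and, before scaling down, uses the highest of them, which damps rapid back-and-forth scaling.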

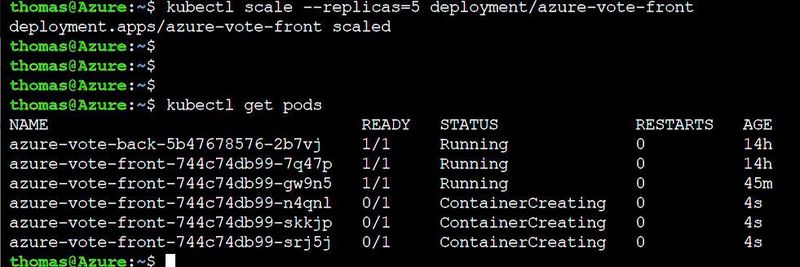

Manual scaling of pods.

(Image: Drilling / Microsoft)

Let’s now look at manual scaling with kubectl. We first increase the number of replicas to 2. For this we need the name of the deployment determined above:

kubectl scale --replicas=2 deployment/<Deployment Name>

We then check the result with

kubectl get pods
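The scale-then-check step above can also be wrapped so that the shell waits until the new pods are actually available. A minimal sketch, with the hypothetical function name `scale_and_wait` and an assumed timeout of 120 seconds:

```shell
# Hypothetical helper: set a fixed replica count, then block until the
# deployment reports the new pods as available (or the timeout expires).
scale_and_wait() {
  deployment="$1"
  replicas="$2"
  kubectl scale --replicas="$replicas" "deployment/$deployment" &&
    kubectl rollout status "deployment/$deployment" --timeout=120s
}
```

For instance, `scale_and_wait <Deployment Name> 2` performs the scaling step shown above and returns only when the rollout has finished or the timeout has expired.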

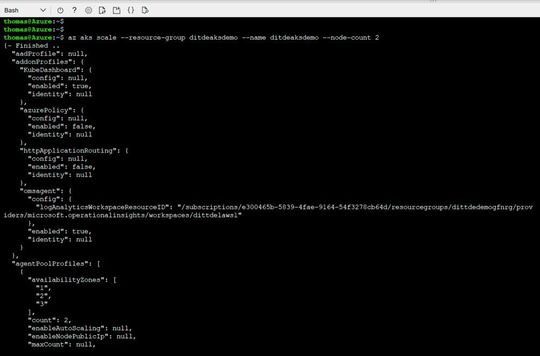

Manual scaling of nodes.

(Image: Drilling / Microsoft)

Now we want to increase the number of nodes in the cluster. For this, however, we use the Azure CLI command “az aks scale” rather than kubectl:

az aks scale --resource-group <Resource Group Name> --name <Cluster Name> --node-count 2

We check the result again with the get command:

kubectl get nodes
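If the cluster has more than one node pool, `az aks scale` needs to be told which pool to resize via `--nodepool-name`. The following sketch first lists the pools and then scales the chosen one. The function name `scale_node_pool` is hypothetical, and a pool name such as `nodepool1` is only the usual default, so check it against the list output.

```shell
# Hypothetical helper: show the cluster's node pools, then scale one of them
# to a fixed node count.
scale_node_pool() {
  resource_group="$1"
  cluster="$2"
  pool="$3"
  count="$4"
  # Existing pools with their current node counts.
  az aks nodepool list --resource-group "$resource_group" --cluster-name "$cluster" -o table
  az aks scale --resource-group "$resource_group" --name "$cluster" \
    --nodepool-name "$pool" --node-count "$count"
}
```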

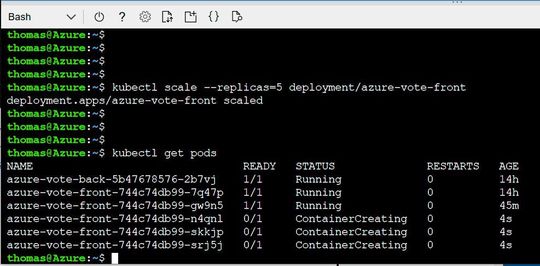

Once again, we increase the number of replicas.

(Image: Drilling / Microsoft)

Finally, we once again increase the number of replicas to 5 and check the result.

kubectl scale --replicas=5 deployment/<Deployment Name>

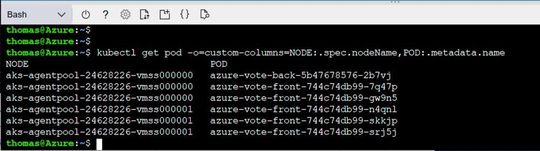

The distribution of pods.

(Image: Drilling / Microsoft)

We can also look at the distribution of pods across the cluster as follows:

kubectl get pod -o=custom-columns=NODE:.spec.nodeName,POD:.metadata.name
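To count the pods per node instead of listing them, the node names can be extracted with JSONPath and tallied with standard Unix tools. This is a sketch with the hypothetical function name `pods_per_node`; it only covers the current namespace.

```shell
# Hypothetical helper: count how many pods of the current namespace run on
# each node. Pending pods have no node yet and are counted under an empty name.
pods_per_node() {
  kubectl get pods -o jsonpath='{range .items[*]}{.spec.nodeName}{"\n"}{end}' |
    sort | uniq -c
}
```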

Once you have experimented enough, you can remove the entire deployment from the cluster:

kubectl delete deployment <Deployment Name>
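Manually added nodes keep running, and incurring costs, until they are removed again. A hypothetical cleanup helper could therefore delete the test deployment and scale the node count back in one go; the target node count is an assumption, so use the value the cluster had before the experiment.

```shell
# Hypothetical cleanup helper: remove the test deployment and shrink the
# cluster back to the given node count.
cleanup_scaling_test() {
  deployment="$1"
  resource_group="$2"
  cluster="$3"
  node_count="$4"
  kubectl delete deployment "$deployment"
  az aks scale --resource-group "$resource_group" --name "$cluster" --node-count "$node_count"
}
```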
