Fuzz Testing vs. Static Code Analysis

Is modern fuzzing the future of DevSecOps?

Behind the term DevSecOps is the idea that every team member of a software project shares responsibility for the development, operation and security of the entire project. Static code analysis, test generators and numerous machine learning approaches promise a higher degree of automation.

In application security testing, static code analysis (Static Application Security Testing, SAST) is the most widely used approach. The source code is scanned without being executed. The analysis looks for suspicious patterns in the control and data flow, which are tracked using heuristics and fixed rules. Code locations that match such patterns and could indicate vulnerabilities are reported to the developer as suspicious or as bugs.
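
A minimal sketch of the rule-based idea, using Python's standard `ast` module: the source is parsed into a syntax tree but never executed, and every node is matched against fixed rules. The two rules (calls named `eval`/`exec`, and any call passing `shell=True`) and the names `scan`, `Finding` and `DANGEROUS_CALLS` are hypothetical illustrations, not taken from any real SAST tool. Unlike real tools, the sketch does no control- or data-flow tracking at all; it only matches syntax.

```python
import ast
from dataclasses import dataclass
from typing import List

# Hypothetical rule set: bare function names treated as dangerous sinks.
DANGEROUS_CALLS = {"eval", "exec"}


@dataclass
class Finding:
    line: int
    message: str


def scan(source: str) -> List[Finding]:
    """Parse the source into a syntax tree (it is never executed) and apply fixed rules."""
    findings: List[Finding] = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        # Rule 1: direct calls to eval() or exec()
        if isinstance(node.func, ast.Name) and node.func.id in DANGEROUS_CALLS:
            findings.append(Finding(node.lineno, f"call to {node.func.id}()"))
        # Rule 2: any call that passes the literal shell=True
        for kw in node.keywords:
            if kw.arg == "shell" and isinstance(kw.value, ast.Constant) and kw.value.value is True:
                findings.append(Finding(node.lineno, "call with shell=True"))
    # ast.walk is breadth-first, so sort findings by source line for reporting
    return sorted(findings, key=lambda f: f.line)


SAMPLE = '''
import subprocess

def handler(user_input):
    subprocess.run("ls " + user_input, shell=True)
    return eval(user_input)
'''

if __name__ == "__main__":
    for finding in scan(SAMPLE):
        print(f"line {finding.line}: {finding.message}")
```

Run on `SAMPLE`, the scanner reports line 5 (`shell=True`) and line 6 (`eval`) without ever running `handler`, which is what makes this kind of check cheap to put into an IDE or pipeline.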

Since SAST tools do not execute the code, they are easy to integrate and are therefore used in almost every software project. In addition, static code analysis can be used in almost every phase of the development process. In the best case, it already runs in the development environment and detects vulnerabilities in unfinished code before it is checked into the repository.

One of the biggest drawbacks of static analysis is that it produces numerous false positives and false negatives. Warnings are issued even though the code contains no real vulnerability, while at the same time many real vulnerabilities are overlooked and only surface during execution. In practice, large projects can easily receive many thousands of warnings (of which only a small percentage are actual errors), which in the worst case must be checked manually before the software can be released.
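
To make both kinds of error concrete, here is a deliberately naive lexical rule for hard-coded credentials. The rule, its regular expression and the names `SECRET_RULE`, `check_hardcoded_secrets` and `CODE` are hypothetical and chosen only for illustration; real SAST tools use far richer analyses, but any fixed rule can misfire in the same two directions.

```python
import re
from typing import List, Tuple

# Hypothetical lexical rule: a name containing "password" assigned a string literal.
SECRET_RULE = re.compile(r"""password\w*\s*=\s*["'][^"']*["']""", re.IGNORECASE)


def check_hardcoded_secrets(source: str) -> List[Tuple[int, str]]:
    """Return (line number, stripped line) for every line that matches the rule."""
    return [
        (lineno, line.strip())
        for lineno, line in enumerate(source.splitlines(), start=1)
        if SECRET_RULE.search(line)
    ]


CODE = '''\
db_password = "hunter2"
# example: password = "<read from environment>"
api_token = "s3cr3t"
'''

if __name__ == "__main__":
    for lineno, text in check_hardcoded_secrets(CODE):
        print(lineno, text)
```

On `CODE`, the rule correctly flags line 1, wrongly flags the comment on line 2 (a false positive) and misses the token on line 3 because its name does not contain the word password (a false negative).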

This, in turn, creates enormous usability problems: developers often take the results of static analysis less seriously, and as a consequence security as a whole is neglected. A common strategy is to outsource the review of the warnings to external consultants. However, this approach contradicts the basic philosophy of DevSecOps (the “cross-functional team”), since responsibility for security may be shifted to third parties who lack domain knowledge.

Many software errors can already be prevented with static analysis. However, its effectiveness and efficiency are limited by usability problems such as frequent false positives and false negatives. At runtime, crashes and security problems still occur that the analysis did not find.

The question therefore arises: is static code analysis alone in the CI/CD pipeline sufficient to ensure the security of a product and the effectiveness of the DevSecOps teams reviewing it? Many organizations opt for static code analysis because it is easy to integrate and gives the impression that security and quality measures have been taken. However, further testing approaches are needed in the pipeline to ensure continuously high quality.

Fuzzing – Dynamic code analysis for DevSecOps

Dynamic code analysis, which, unlike static code analysis, executes the application with input values and tests it at runtime, is still in its infancy in DevSecOps. However, modern fuzzing, also known as feedback-based, coverage-guided or instrumented fuzzing, is becoming the most effective tool for automated testing among security researchers, software testing experts and technology leaders such as Google.

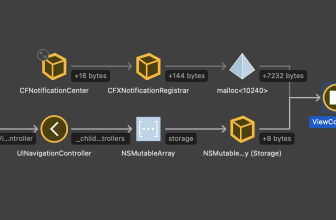

In fuzzing, the software under test is executed with a series of input values that are then systematically mutated. The mutations are guided by feedback the fuzzing tool collects while executing each input: which code was covered along the execution path, which instructions were executed, and which program states were reached. Based on this feedback, the fuzzing engine can iteratively choose more meaningful mutations in subsequent runs. Unlike traditional black-box fuzzing, modern fuzzing thus explores the program's states effectively and detects errors hidden deep in the code (e.g., in edge cases that were forgotten in manual testing).
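
The feedback loop described above can be sketched as a toy coverage-guided fuzzer. This is a minimal illustration, assuming line coverage collected with Python's `sys.settrace` as a stand-in for the compile-time instrumentation that engines such as libFuzzer or AFL use; `target`, `run_with_coverage`, `mutate` and `fuzz` are hypothetical names, and the mutation strategy (replace, insert or delete one byte) is far simpler than in real engines.

```python
import random
import sys
from typing import Callable, List, Optional, Set, Tuple

Coverage = Set[Tuple[str, int]]  # (file name, line number) pairs executed in the target


def target(data: bytes) -> None:
    """Hypothetical function under test: the bug hides behind four nested checks."""
    if len(data) > 0 and data[0] == ord("F"):
        if len(data) > 1 and data[1] == ord("U"):
            if len(data) > 2 and data[2] == ord("Z"):
                if len(data) > 3 and data[3] == ord("Z"):
                    raise RuntimeError("bug reached")


def run_with_coverage(
    fn: Callable[[bytes], None], data: bytes
) -> Tuple[Coverage, Optional[Exception]]:
    """Run fn(data) once; return the lines of fn it executed and any exception raised."""
    covered: Coverage = set()
    code = fn.__code__

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None  # do not trace other functions
        if event == "line":
            covered.add((code.co_filename, frame.f_lineno))
        return tracer

    sys.settrace(tracer)
    try:
        fn(data)
    except Exception as exc:  # an exception counts as a crash
        return covered, exc
    finally:
        sys.settrace(None)
    return covered, None


def mutate(data: bytes, rng: random.Random) -> bytes:
    """Apply one random byte-level mutation: replace, insert or delete a byte."""
    buf = bytearray(data)
    choice = rng.randrange(3)
    if choice == 0 and buf:  # replace a random byte
        buf[rng.randrange(len(buf))] = rng.randrange(256)
    elif choice == 1 or not buf:  # insert a random byte
        buf.insert(rng.randrange(len(buf) + 1), rng.randrange(256))
    else:  # delete a random byte
        del buf[rng.randrange(len(buf))]
    return bytes(buf)


def fuzz(
    fn: Callable[[bytes], None], seeds: List[bytes], iterations: int, seed: int = 0
) -> Tuple[List[bytes], List[bytes]]:
    """Coverage-guided loop: keep mutants that reach new lines, record crashing inputs."""
    if not seeds:
        raise ValueError("need at least one seed input")
    rng = random.Random(seed)
    corpus: List[bytes] = list(seeds)
    total: Coverage = set()
    for s in seeds:
        total |= run_with_coverage(fn, s)[0]
    crashes: List[bytes] = []
    for _ in range(iterations):
        candidate = mutate(rng.choice(corpus), rng)
        cov, error = run_with_coverage(fn, candidate)
        if error is not None:
            crashes.append(candidate)
        elif not cov <= total:  # feedback: new lines reached, keep input for later mutation
            total |= cov
            corpus.append(candidate)
    return corpus, crashes


if __name__ == "__main__":
    corpus, crashes = fuzz(target, [b"A"], iterations=50_000)
    print(f"corpus: {corpus}")
    print(f"crashing inputs: {crashes[:3]}")
```

The `elif` branch is the feedback: an input that reaches a new line is kept, so the corpus tends to grow one matching byte of `FUZZ` at a time. Whether the crash is reached within a given budget depends on the random seed, but a black-box loop without this branch would have to guess all four bytes at once, which is far less likely.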

A look at technology leaders such as Google and Microsoft shows that they already use modern fuzzing techniques almost everywhere to test their own code for security and reliability. For example, more than 27,000 bugs were found this way in Google Chrome and several open source projects. In 2019, Google stated that it now finds 80 percent of its bugs through fuzzing. These companies have already integrated fuzzing into their CI/CD pipelines, where it runs automatically and demonstrates its effectiveness at detecting bugs and vulnerabilities.

Just like unit and system testing, fuzzing has the potential to change the way software is tested worldwide. Developers need to spend less effort on unit tests to cover a range of possible edge cases; instead, DevSecOps teams can set up fuzz tests that automatically generate large numbers of unbiased test cases. By making developers more effective and efficient, fuzzing can substantially reduce development costs. Nevertheless, it makes sense to combine fuzzing with static analysis, for example to identify external interfaces that can be fuzzed automatically and to help maximize code coverage during test runs.
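
The difference between hand-picked unit tests and a fuzz test can be sketched with a hypothetical run-length encoder as the code under test. Instead of writing one unit test each for empty input, single bytes and runs longer than 255, the fuzz test states properties once and lets generated inputs hit the edge cases. The single-entry-point shape of `fuzz_one_input(data: bytes)` mirrors the convention of libFuzzer's `LLVMFuzzerTestOneInput` and Atheris's `TestOneInput`, but all names here are hypothetical, and the naive random driver only stands in for a real coverage-guided engine, to which such a target would normally be handed.

```python
import random


def rle_encode(data: bytes) -> bytes:
    """Hypothetical code under test: run-length encode as (count, byte) pairs, count <= 255."""
    out = bytearray()
    i = 0
    while i < len(data):
        run = 1
        while i + run < len(data) and data[i + run] == data[i] and run < 255:
            run += 1
        out += bytes((run, data[i]))
        i += run
    return bytes(out)


def rle_decode(encoded: bytes) -> bytes:
    """Inverse of rle_encode; rejects input with an odd number of bytes."""
    if len(encoded) % 2:
        raise ValueError("truncated (count, byte) pair")
    out = bytearray()
    for j in range(0, len(encoded), 2):
        out += bytes([encoded[j + 1]]) * encoded[j]
    return bytes(out)


def fuzz_one_input(data: bytes) -> None:
    """Fuzz target: takes arbitrary bytes and fails (AssertionError or an
    unexpected exception) if a property of the code under test is violated."""
    # Property 1: decoding an encoding returns the original input.
    assert rle_decode(rle_encode(data)) == data
    # Property 2: decoding arbitrary bytes may only fail with the documented ValueError.
    try:
        rle_decode(data)
    except ValueError:
        pass


def random_input(rng: random.Random) -> bytes:
    """Naive generator: a few runs of random bytes, some longer than 255."""
    data = bytearray()
    for _ in range(rng.randrange(5)):
        data += bytes([rng.randrange(256)]) * rng.randrange(600)
    return bytes(data)


def run_fuzz_test(iterations: int = 1_000, seed: int = 0) -> int:
    """Feed generated inputs to the fuzz target; returns the number of inputs tried."""
    rng = random.Random(seed)
    for _ in range(iterations):
        fuzz_one_input(random_input(rng))
    return iterations


if __name__ == "__main__":
    print(f"{run_fuzz_test()} generated inputs passed")
```

The `run < 255` cap in the encoder is exactly the kind of edge case a hand-written test suite might forget; `random_input` deliberately produces runs of up to 599 bytes so that the round-trip property exercises it on almost every run.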

Making use of the potential

Apart from the technology leaders, only a few companies in Europe rely on these promising fuzzing technologies. Instead, testing, and pentesting in particular, is still done mainly by hand during acceptance, as part of complex privacy and security assessment (PSA) processes. This has two main consequences:

1. The use of expensive pentester resources leads to unnecessarily high, avoidable costs. Although pentesters are essential for the final acceptance tests, their time should not be wasted on errors that automated fuzzing could have found earlier.

2. Bugs and vulnerabilities go undetected and may even make it into production, because without fuzzing, application security is only as good as the pentester. Fuzzing has been shown to find bugs and vulnerabilities in software that had been classified as secure even after thorough reviews by well-trained security experts, successful static code analysis and pentests by renowned bug hunters. This is particularly evident in large, highly complex projects such as the Linux kernel and Google Chrome.

Why, then, is fuzzing hardly used outside the leading DevSecOps companies? Open source fuzz testing tools such as american fuzzy lop (AFL) or libFuzzer are still unknown in most teams, their usability is considered poor, and above all they are criticized for the effort needed to integrate them. The market is already responding: more and more commercial vendors now offer modern fuzzing for DevSecOps in various domains.

Whether fuzzing can actually be integrated effectively into DevSecOps will depend crucially on whether these integration and usability problems can be solved in the coming years. Only then can “Sec” be effectively and reliably combined with “DevOps”.

Given the possibilities and opportunities that fuzzing offers, no software should be shipped that contains errors fuzzing could have prevented. Attackers, after all, use every means available to them, including fuzzing techniques to find and exploit errors in software, especially once they have the executable in hand. As a software manufacturer, however, you have an enormous home-field advantage over the attacker: you own the source code and can instrument it accordingly, and you should make use of that.

About the author: Sergej Dechand is CEO and co-founder of Code Intelligence. The company focuses on making complex software testing methods easier for developers to use. Sergej gained relevant experience as an external software developer and IT consultant for various DAX companies and as a project manager at Fraunhofer FKIE. In addition to his duties at Code Intelligence, he is actively involved in research on usable security at the University of Bonn, where he also works as a lecturer.
