You can read more about developing and building your own platform for customers and business partners in this article. […]

“Everything-as-a-Platform” is probably one of the most frequently heard paradigms of the digital world. Some of the largest companies, such as Amazon, as well as newer players on the market, such as Shopify, are built on the platform business model. But what does this mean? In essence, digitization is now reaching B2B business as well: more and more customers, and the companies themselves, are turning to digital platforms to interact and exchange ideas.

In orchestrating these relationships, price is not always the deciding factor. It is often about seamlessly integrating a company's products and services into the customer's world of experience (in modern jargon, the ecosystem) or into other companies' processes. We already know all this from our private lives: we buy products online from Amazon or Otto, and our music streams from Spotify or Apple.

Many decision-makers sit frozen like a rabbit in front of a snake, hoping that everything will carry on as before and that competitors will not disrupt their company. Managers often leave the creative field to third parties or competitors. Those who finally act at short notice, out of necessity, often seek salvation in standard systems without suspecting that these may not meet the requirements of their own USP.

The cooperative association Association of the Regions shows that it can be done differently. “In the midst of the pandemic, we developed our own tailor-made solution for digital interaction with our clients, the easyGeno audit platform, and successfully put it into production. And all this at record speed, in just a few months!” says Board member Peter Götz.

As part of the auditing of cooperatives, client interaction was digitized with a custom platform that meets the highest security requirements, given the sensitive data involved in this line of business. The many paper documents that used to be exchanged can now be handled digitally. This not only saves time but has also supported contactless and decentralized work, especially during the pandemic.

Under the motto “Crisis as an opportunity”, the cooperative association thus used this period to create a unique selling proposition. In the future, the platform will also enable further internal automation (because the information is already available digitally) and a step-by-step expansion of the range of services offered externally.

Many companies already have established in-house systems for isolated tasks. Prominent examples are CRM and ERP systems, which work very well within their traditional domains and core functions. However, they are often not designed for interacting with customers or business partners. Yet it is precisely this interaction and its usability that must be designed from the user's perspective. It is by no means sufficient to simply import and export data from internal systems or to connect them to the customer's systems.

Using standard systems is roughly comparable to ordering a business card. A company can of course choose a standard design from a catalog, knowing that many other companies use the same design. Given the lack of differentiation, however, it should not expect it to make much of an impression. Transfer this example to the digital customer interface, the digital face of the company, and it becomes clear why the “one size fits all” approach holds little promise here: if customer-oriented unique selling propositions are missing compared to the competition, no new customers will come to the platform. Why would they?

When it comes to implementing your own platform, one thing must be said up front: speed is everything. Achieving that speed requires interdisciplinary cooperation and the involvement of experts from different disciplines, comparable to a Formula 1 team. It is no coincidence that the authors of this article include management-oriented subject matter experts as well as experts in software development and IT operations.

Only with a targeted “expert mix”, so to speak, can a custom digital platform be taken from 0 to 100 within a few months and put into live operation, without turning into a complex IT project that drags on for years.

The path to your own platform typically follows eight steps:

- Define and design the digital experience from the customer's point of view

- Assess the benefits to determine the ideal target state

- Put together the right team

- Align the approach with tangible deliverables and accelerate it

- Think about IT security and data protection from the very beginning

- When choosing the operating model, also consider cloud options

- Use user feedback to guide further development

- Continuously expand the platform with useful functions and scale it

The activities focus on developing and implementing the platform. Before that, however, the differentiating “digital experience” must first be described from the customer's point of view. This naturally includes an assessment of the expected benefits, which cannot always be quantified directly in monetary terms. After all, how can the viral network effect of a campaign be reliably predicted in advance, let alone valued in money?

The core of the implementation is assembling the right team: orchestrating and sourcing domain expertise, software development specialists including UX/UI experts, and experts who build and operate platform infrastructure, ranging from on-premises to cloud.

Using an agile approach, visible results in the form of minimum viable products (MVPs) can then be developed quickly and iteratively. Cybersecurity and data protection requirements should be considered from the very beginning, in coordination with the responsible stakeholders. These concerns are often deeply embedded in the application logic and can frequently be improved later only through costly restructuring of the architecture. The same applies to the operating model, which is often implemented as a software-defined data center or a hyperconverged infrastructure.

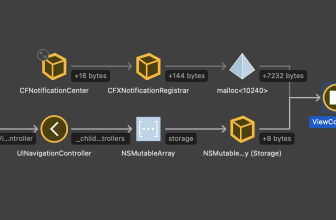

The result is a microservice-based application architecture running on state-of-the-art infrastructure. Looking ahead, a company with such an operating model is well equipped for future scaling: more functions, more users, more products, more target markets, and ultimately an ecosystem. However, the path there should be guided by users' feedback and wishes, so as not to run into a dead end.

Anyone who now thinks they can succeed single-handedly has already lost. Success lies in applying network principles during development itself. Very few companies have enough of the right experts to assemble a “Formula 1” team from scratch. And for most, building such teams through permanent hires does not pay off, because not every company is like ING Diba or Spotify. This is why so-called consulting ecosystems now exist, combining several providers to bring the right experts to the starting line. When will you launch your platform?

*Oliver Laitenberger heads the Competence Center Digitalization and Technology at the management consultancy Horn & Company.

**Dr. Seebach supports banks and insurance companies in implementing groundbreaking digitization projects. He studied economics at Goethe University Frankfurt (Main), where he also received his doctorate in information systems research. He has 15 years of consulting experience in the financial services sector.