Hello everyone! Today I will show you how to write a palm recognition system with Python and OpenCV in 26 lines of code. The tutorial requires only minimal knowledge of OpenCV.

What we will get

Approximately this:

Useful links

OpenCV Website

Mediapipe documentation

Code on GitHub (No comments)

Before work

You will need:

- Python 3;

- OpenCV;

- mediapipe.

Both packages are installed with the pip package manager:

    pip install opencv-python
    pip install mediapipe

Code
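Before moving on, it may be worth checking that both packages are visible to the interpreter. The sketch below uses only the standard library; the helper name missing_packages is made up for this illustration. Note that opencv-python is imported under the name cv2.

```python
import importlib.util


def missing_packages(module_names):
    """Return the module names that cannot be found in the current environment."""
    # find_spec returns None for a top-level module that is not installed.
    return [name for name in module_names if importlib.util.find_spec(name) is None]


# cv2 is the import name of opencv-python; mediapipe imports under its own name.
missing = missing_packages(["cv2", "mediapipe"])
if missing:
    print("Not installed:", ", ".join(missing))
else:
    print("Both packages are importable.")
```

If the first message appears, rerun the matching pip install command in the same environment that runs the script.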

I decided to use mediapipe because it already ships with a pre-trained neural network for palm recognition.

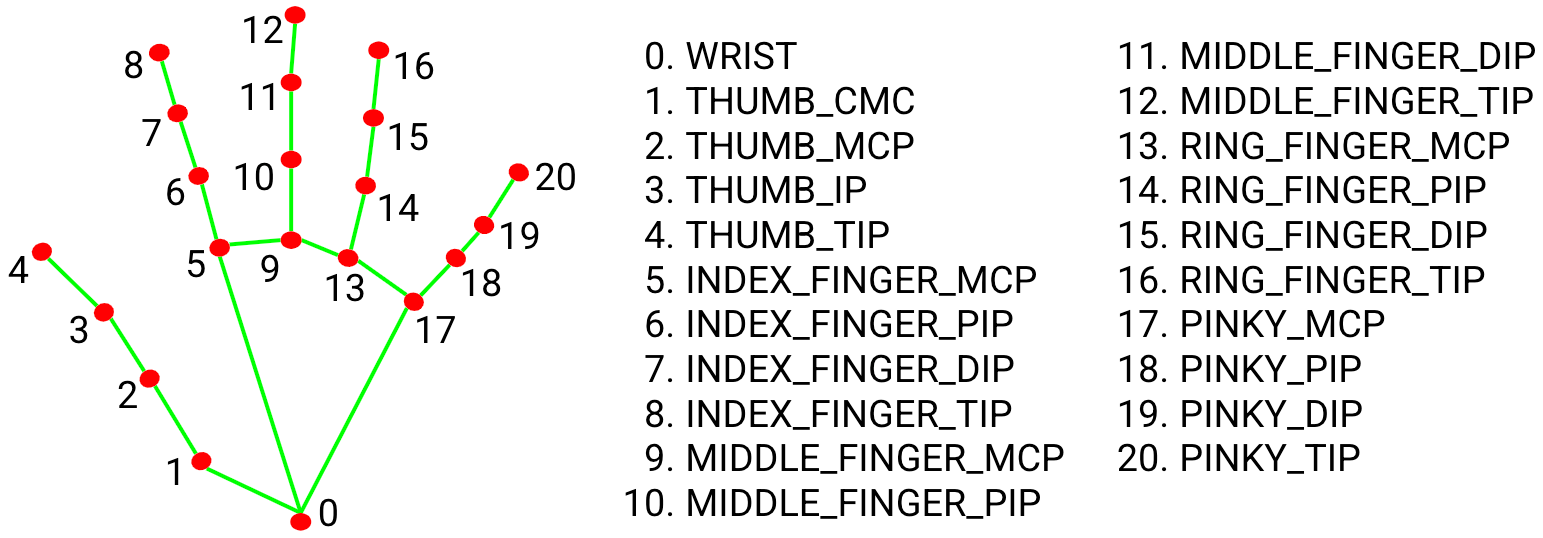

To begin with, you need to understand that the palm consists of joints that can be expressed with dots:
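To make the numbering of these points concrete, here is a small sketch that rebuilds the list of point names in index order. The names follow mediapipe's HandLandmark enum; the list LANDMARK_NAMES and the helper landmark_index are assumptions introduced only for this example and are not part of the library.

```python
# Joint suffixes shared by the four non-thumb fingers, from palm to fingertip.
FINGER_JOINTS = ("MCP", "PIP", "DIP", "TIP")

# Index 0 is the wrist, indices 1-4 belong to the thumb.
LANDMARK_NAMES = ["WRIST", "THUMB_CMC", "THUMB_MCP", "THUMB_IP", "THUMB_TIP"]
for finger in ("INDEX_FINGER", "MIDDLE_FINGER", "RING_FINGER", "PINKY"):
    LANDMARK_NAMES += [f"{finger}_{joint}" for joint in FINGER_JOINTS]


def landmark_index(name):
    """Return the position of a named point in the landmark list."""
    return LANDMARK_NAMES.index(name)


print(len(LANDMARK_NAMES))                  # 21
print(landmark_index("INDEX_FINGER_TIP"))   # 8
```

With such a table, a point taken from the detection result can be looked up by name, for example the tip of the index finger at index 8.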

Next, we write the code itself:

    import cv2
    import mediapipe as mp

    cap = cv2.VideoCapture(0)  # Camera
    hands = mp.solutions.hands.Hands(max_num_hands=1)  # Neural network object that detects the palm
    draw = mp.solutions.drawing_utils  # Utility for drawing the palm

    while True:
        # Close the window when Esc is pressed
        if cv2.waitKey(1) & 0xFF == 27:
            break

        success, image = cap.read()  # Read a frame from the camera
        image = cv2.flip(image, -1)  # Flip the frame so the picture is oriented correctly
        imageRGB = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)  # Convert to RGB
        results = hands.process(imageRGB)  # Run mediapipe

        if results.multi_hand_landmarks:
            for handLms in results.multi_hand_landmarks:
                for id, lm in enumerate(handLms.landmark):
                    h, w, c = image.shape
                    cx, cy = int(lm.x * w), int(lm.y * h)

                draw.draw_landmarks(image, handLms, mp.solutions.hands.HAND_CONNECTIONS)  # Draw the palm

        cv2.imshow("Hand", image)  # Show the frame

A solution to the error ImportError: DLL load failed can be found here.

The code is commented, but let's go through it in detail.

Importing OpenCV and mediapipe:

    import cv2
    import mediapipe as mp

Next come the objects we need: the camera (cap), the neural network that detects palms (hands), whose max_num_hands argument sets the maximum number of palms to detect, and our “drawing machine” (draw).
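Besides max_num_hands, the Hands constructor accepts a few other keyword arguments. The sketch below collects some of them in a dictionary; the names HAND_OPTIONS and make_hands are invented for this example, the values other than max_num_hands are what I understand to be the library defaults, and mediapipe is imported lazily so the settings can be inspected even where the package is missing.

```python
# Keyword arguments for mp.solutions.hands.Hands (illustrative values).
HAND_OPTIONS = {
    "static_image_mode": False,       # False: treat frames as a video stream and track between them
    "max_num_hands": 1,               # same value as in the tutorial
    "min_detection_confidence": 0.5,  # minimum score for the initial palm detection
    "min_tracking_confidence": 0.5,   # minimum score for keeping track of a hand between frames
}


def make_hands(options=None):
    """Create the detector; mediapipe is imported only when this is called."""
    import mediapipe as mp

    return mp.solutions.hands.Hands(**(options or HAND_OPTIONS))
```

Raising the two confidence thresholds should make the detector stricter, at the cost of losing the hand more often.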

    cap = cv2.VideoCapture(0)
    hands = mp.solutions.hands.Hands(max_num_hands=1)
    draw = mp.solutions.drawing_utils

An infinite loop:

    while True:

Exit the loop if the Esc key (key code 27) is pressed.
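The exit condition can be unpacked with a small stand-alone sketch. cv2.waitKey returns -1 when no key was pressed during the delay, and the & 0xFF mask keeps only the low 8 bits of the returned code, which is a common precaution in case higher bits are set on some platforms. In Python, & binds tighter than ==, so the condition is read as (key & 0xFF) == 27. The helper is_escape is a name made up for this illustration.

```python
ESC_KEY = 27  # ASCII code of the Esc key


def is_escape(key_code):
    """Mimic the tutorial's check: keep the low 8 bits, then compare with 27."""
    return (key_code & 0xFF) == ESC_KEY


print(is_escape(27))        # True: Esc itself
print(is_escape(-1))        # False: -1 & 0xFF is 255, meaning no key was pressed
print(is_escape(0x10001B))  # True: extra high bits are masked away
```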

    if cv2.waitKey(1) & 0xFF == 27:
        break

We read a frame from the camera, flip it both vertically and horizontally (flip code -1), and convert it from BGR to RGB. Then comes the most interesting part: we pass the frame to the palm detector.
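What the flip and the color conversion do to the pixels can be sketched in plain Python on a tiny nested-list "image". This only imitates the effect of cv2.flip with flip code -1 and of cv2.COLOR_BGR2RGB; it is not how OpenCV implements them, and the helper names flip_both and bgr_to_rgb are invented for the example.

```python
def flip_both(image):
    """Reverse the row order and the column order, like flip code -1."""
    return [row[::-1] for row in image[::-1]]


def bgr_to_rgb(image):
    """Swap the first and third channel of every (blue, green, red) pixel."""
    return [[(r, g, b) for (b, g, r) in row] for row in image]


# A 2x2 "image": each pixel is a (blue, green, red) tuple.
frame = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (10, 20, 30)],
]

flipped = flip_both(frame)
print(flipped[0][0])              # (10, 20, 30): the bottom-right pixel moved to the top-left
print(bgr_to_rgb(flipped)[0][0])  # (30, 20, 10): same pixel with channels reordered
```

For a mirror-like selfie view, a horizontal-only flip (flip code 1) is the usual choice; flip code -1 amounts to rotating the frame by 180 degrees.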

    success, image = cap.read()
    image = cv2.flip(image, -1)
    imageRGB = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
    results = hands.process(imageRGB)

The first if block checks whether any palms were found at all (the result is None when nothing is detected, and any non-empty object is truthy). The outer for loop then iterates over the detected hands, each of which is a set of points, and the inner for loop iterates over the points themselves.

draw.draw_landmarks is a handy utility that draws the palm on an image. Its arguments are the image, the set of points, and the connections to draw between them (in our case, those of a hand).
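The inner loop converts each point from normalized coordinates to pixels: lm.x and lm.y are fractions of the image width and height, so multiplying by w and h gives a pixel position. A minimal sketch of that step, with a made-up helper name to_pixel and a made-up frame size:

```python
def to_pixel(x_norm, y_norm, width, height):
    """Scale normalized coordinates to integer pixel coordinates."""
    return int(x_norm * width), int(y_norm * height)


# image.shape is ordered (height, width, channels), as in the tutorial's h, w, c.
shape = (480, 640, 3)
height, width = shape[:2]

print(to_pixel(0.5, 0.25, width, height))  # (320, 120)
```

The resulting cx, cy pair can be used with OpenCV drawing functions, for example to mark a single point of interest on the frame.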

    if results.multi_hand_landmarks:
        for handLms in results.multi_hand_landmarks:
            for id, lm in enumerate(handLms.landmark):
                h, w, c = image.shape
                cx, cy = int(lm.x * w), int(lm.y * h)

            draw.draw_landmarks(image, handLms, mp.solutions.hands.HAND_CONNECTIONS)

Displaying the picture:

    cv2.imshow("Hand", image)

Result

We wrote palm recognition in Python in 26 lines of code. Cool, isn’t it? Please rate this post; it’s important to me.