More efficiency through Software Delivery Management, Part 2: Overarching transparency and streamlined processes

Continuous delivery has revolutionized software delivery practices. But the successes associated with DevOps methods must also be measured and made visible so that software companies can fully benefit.

Software Delivery Management supports all DevOps stakeholders with comprehensive data, transparent insights and best practices.

Mature CI/CD (continuous integration/continuous delivery) pipelines have enabled companies to build software that performs better on a long list of measures. Failure rates have dropped from 50 percent to less than 15 percent, and deployment cycles are now measured in hours, not weeks.

Ever since Jez Humble and David Farley popularized the notion of continuous delivery in 2010, companies have been bringing products to market maturity faster and with fewer defects. However, the reach of CI and CD is limited: the approaches only link the parts of the software lifecycle that take place within DevOps.

On their own, continuous integration and delivery provide no insight into

- where the deployment cycle is disrupted,

- whether the software helps the customer meet their internal key performance indicators (KPIs),

- how the new software behaves in production use, or

- whether the version released last week will reach the level of efficiency that the software company predicted and promised its customer.

A more holistic approach

In this context, a more holistic approach to software delivery can help. As discussed in the first part of this miniseries, more and more companies recognize software delivery as a core business process and plan to integrate it into the business. Accordingly, all of its aspects are to be woven together into a new, coherent discipline.

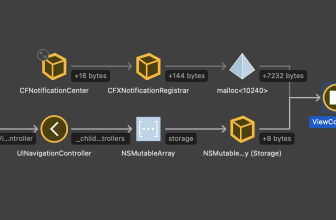

This discipline, called Software Delivery Management (SDM), sets new rules: it goes beyond the concept of CI and CD, empowers developers and other stakeholders to work together as effectively as possible and helps them solve problems creatively. It provides all stakeholders with comprehensive data, transparent insights and best practices. These make it possible to improve continuously, to preserve value, to develop the right products and features efficiently and to deliver them to customers.

For the ideal implementation of the SDM principle, a management solution must be based on four essential aspects: common data, universal transparency, interlinked processes and collaboration between all departments.

- Data: All information in and around software delivery activities must be captured and stored with a consistent domain model, which is what makes interlinked processes, shared insights and collaboration possible.

- Insight: Shared data should be transparent and accessible across the whole organization, so that every area can understand it and learn from it continuously.

- Interlinked processes: The processes should orchestrate the entire software delivery value chain and connect all work areas, so that ideas reach the market efficiently and with maximum added value and acceptance.

- Collaboration: All departments and teams involved in software delivery, inside and outside the organization, work together to optimize value creation processes.

The benefits of SDM

This new approach helps software companies in many ways. Three benefits stand out for DevOps managers: in how they work, how they collaborate with development teams, and how they connect with colleagues across their organizations.

1. They develop faster.

The current process can be slow and tedious. Developers use tools that are each configured for one specific activity, which makes it difficult to get a holistic view across the entire delivery chain. An SDM solution captures all artifacts and data from the various tools involved in developing the software, from idea to deployment, in a unified, common data layer.

All this information is interlinked and available in generic formats anytime, anywhere. Having this correlated information at hand already saves much of the time otherwise spent searching for data to check status and troubleshoot throughout the development process.
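To make the idea of a common data layer more concrete, here is a minimal sketch in Python. It is not taken from any SDM product: the `Artifact` and `DataLayer` classes, their fields, the tool names and the identifiers such as `FEAT-1` are all hypothetical and chosen only for illustration. The sketch assumes that every record captured from a tool is linked to the feature it belongs to, so that the status of a feature can be traced in one place.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Artifact:
    """One record captured from a tool (hypothetical schema)."""
    tool: str    # source tool, e.g. "issue-tracker", "ci", "deploy"
    kind: str    # e.g. "idea", "commit", "build", "release"
    ref: str     # identifier inside the source tool
    status: str  # e.g. "done", "failed", "pending"


@dataclass
class DataLayer:
    """In-memory stand-in for a common data layer, keyed by feature."""
    by_feature: dict[str, list[Artifact]] = field(default_factory=dict)

    def capture(self, feature: str, artifact: Artifact) -> None:
        # Link the artifact to its feature, whatever tool it came from.
        self.by_feature.setdefault(feature, []).append(artifact)

    def trace(self, feature: str) -> list[Artifact]:
        # Every artifact linked to the feature, in capture order.
        return list(self.by_feature.get(feature, []))

    def first_blocker(self, feature: str) -> Optional[Artifact]:
        # First artifact that is not "done", or None if nothing blocks.
        for artifact in self.trace(feature):
            if artifact.status != "done":
                return artifact
        return None


layer = DataLayer()
layer.capture("FEAT-1", Artifact("issue-tracker", "idea", "IDEA-7", "done"))
layer.capture("FEAT-1", Artifact("git", "commit", "abc123", "done"))
layer.capture("FEAT-1", Artifact("ci", "build", "build-42", "failed"))
layer.capture("FEAT-1", Artifact("deploy", "release", "rel-3", "pending"))

blocker = layer.first_blocker("FEAT-1")
print(blocker.tool, blocker.ref, blocker.status)  # prints "ci build-42 failed"
```

A real solution would persist these records and ingest them from the tools automatically. The point of the sketch is only that one shared model lets a single query answer where a feature is stuck, instead of a search through each tool in turn.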

2. They create better software.

If stakeholders don’t have insight into the development and deployment process, they can’t know whether the software meets the company’s business goals. With SDM, the continuous feedback loop established with CI/CD and DevOps is expanded to fully engage all stakeholders, from the idea generation phase to user adoption and back to idea generation. The insights are logged and shared more widely, allowing developers to deliver what users want more efficiently, and to get it right the first time.

3. They work better together.

The development teams and management do not always work well together for a number of reasons. Developers often do not have a good sense of how a product should be used, and management usually does not have a detailed insight into what is currently being developed or when it will be completed.

To make matters worse, the communication processes are scattered: both sides have to rely on confusing documents as their basis for information, communicate by e-mail and wait for in-person meetings to move things forward. SDM breaks down the silos between the software department and other stakeholders, so that both sides can exchange information, communicate more directly and develop solutions together.

Anders Wallgren (Image: CloudBees)

In this way, software delivery management becomes a real game-changer for all software-driven developments and innovations and picks up on an important trend of our time: connectivity at all levels.

* Anders Wallgren is Vice President of Technology Strategy at CloudBees. He has more than 30 years of in-depth experience in designing and developing commercial software. Prior to joining CloudBees, Wallgren held leadership positions at Electric Cloud, Aceva, Archistra and Impresse. As a manager at Macromedia, he was instrumental in products such as Director and various Shockwave products.
